I am a Member of Technical Staff on the Deep Research team at OpenAI, working on ChatGPT agent. Previously, I was part of the Multimodal team, where I helped improve the visual reasoning capabilities of our flagship models. I earned my Ph.D. from the Robotics Institute at Carnegie Mellon University, where I had the privilege of working with Deva Ramanan. I received the Best Paper Honorable Mention Award at ECCV 2020 for Towards Streaming Perception.

My research interests include multimodal perception and generation, long-horizon tasks, and agentic AI.

Selected Papers

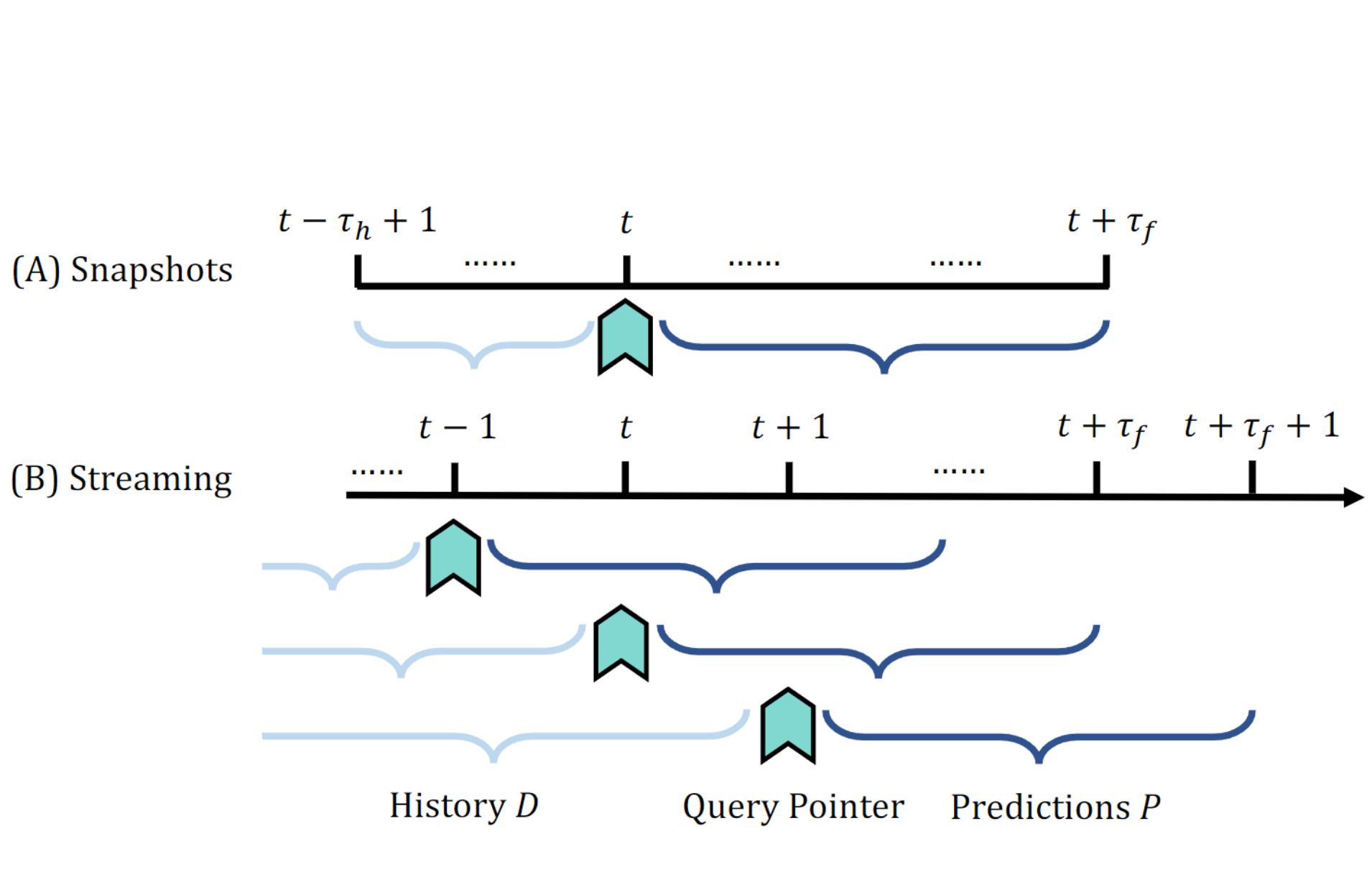

Ziqi Pang, Deva Ramanan, Mengtian Li and Yu-Xiong Wang. Streaming Motion Forecasting for Autonomous Driving. In IROS, Oct 2023.

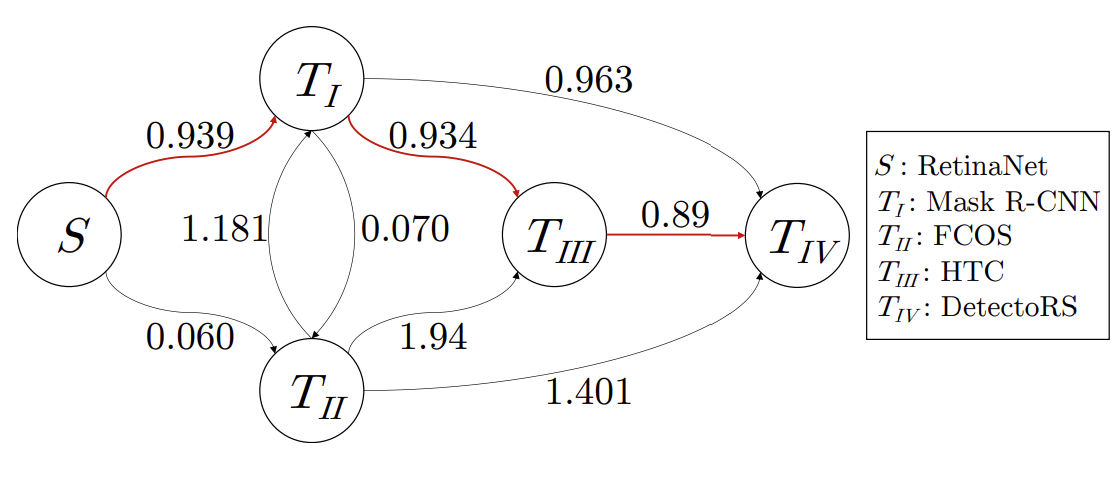

Shengcao Cao, Mengtian Li, James Hays, Deva Ramanan, Yu-Xiong Wang and Liangyan Gui. Learning Lightweight Object Detectors via Progressive Knowledge Distillation. In ICML, Jul 2023.

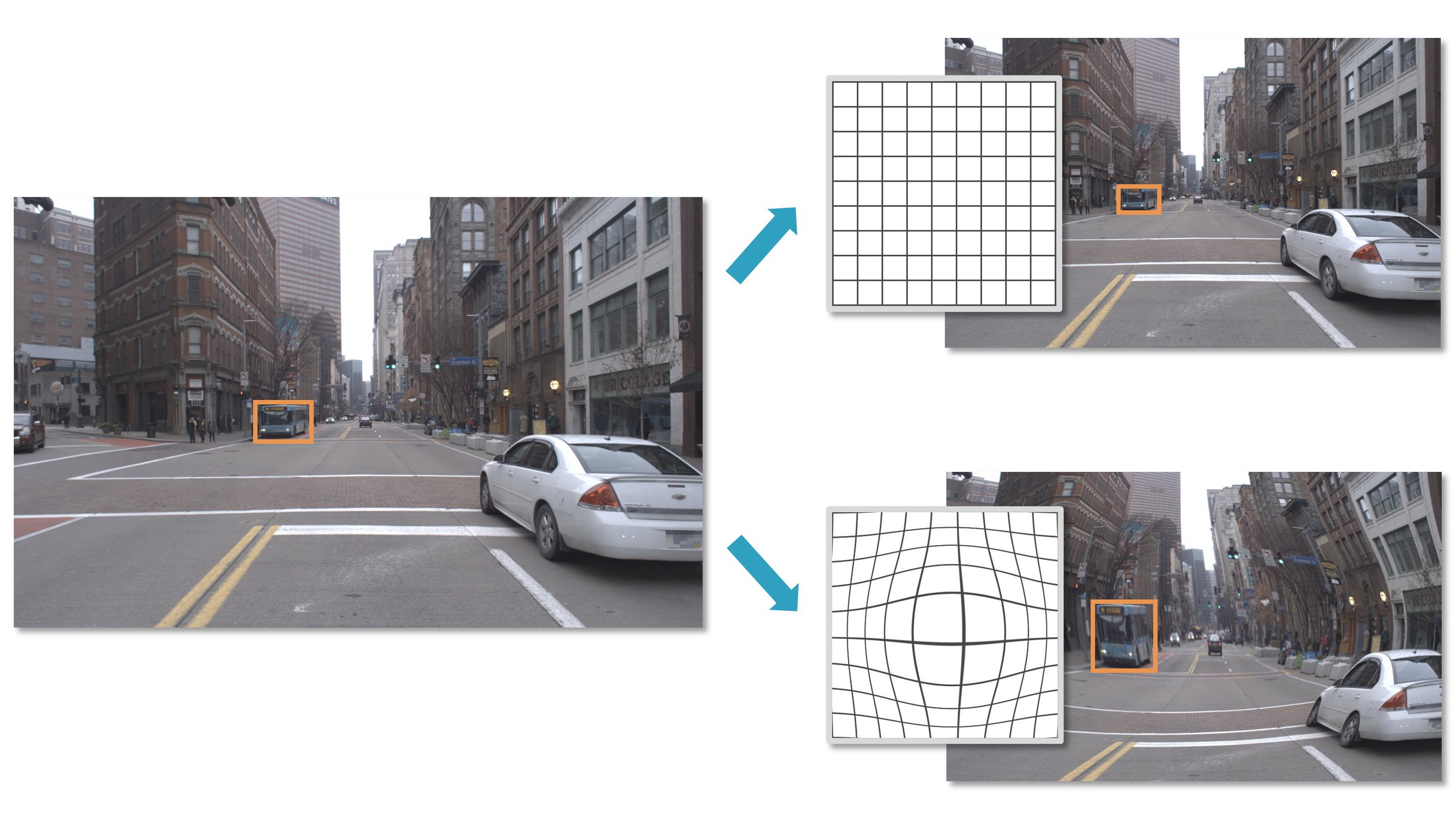

Chittesh Thavamani, Mengtian Li, Francesco Ferroni and Deva Ramanan. Learning to Zoom and Unzoom. In CVPR, Jun 2023.

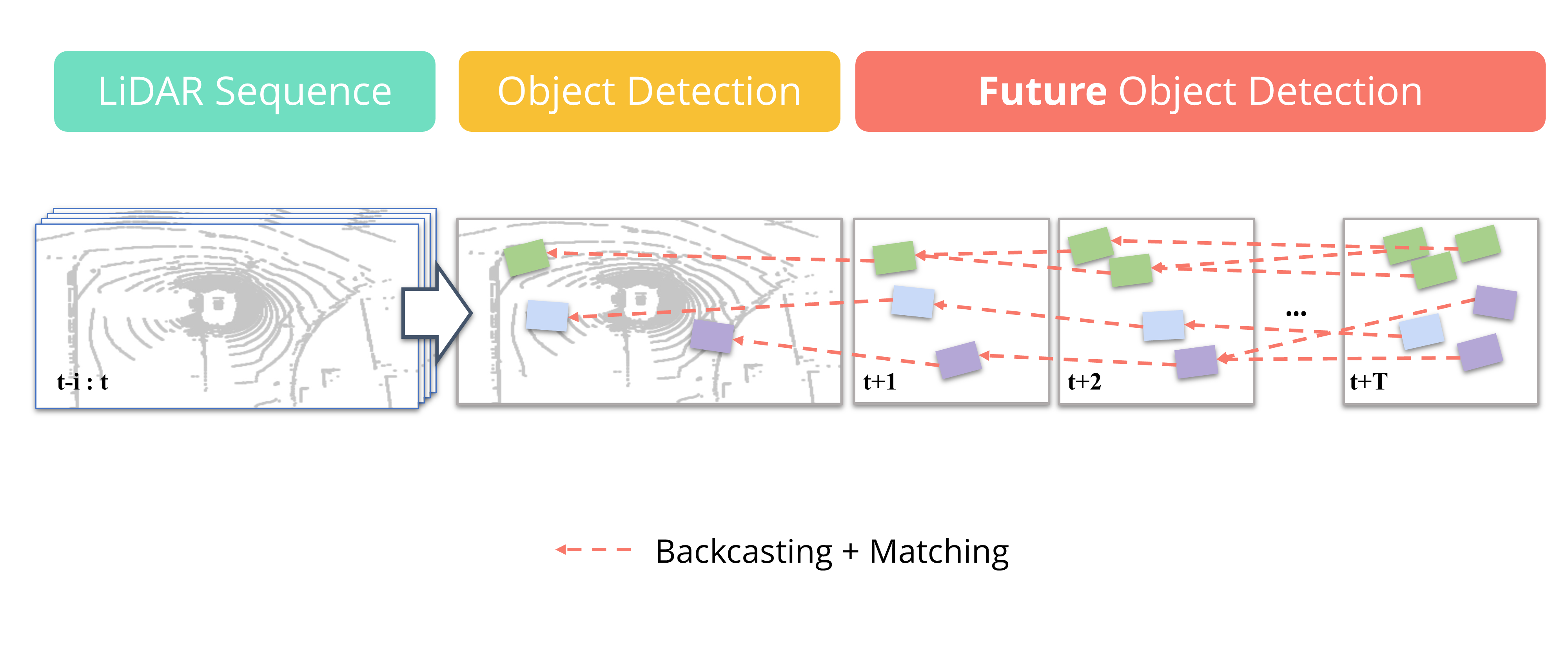

Neehar Peri, Jonathon Luiten, Mengtian Li, Aljosa Osep, Laura Leal-Taixé and Deva Ramanan. Forecasting from LiDAR via Future Object Detection. In CVPR, Jun 2022.

Chittesh Thavamani*, Mengtian Li*, Nicolas Cebron and Deva Ramanan. FOVEA: Foveated Image Magnification for Autonomous Navigation. In ICCV, 2021.

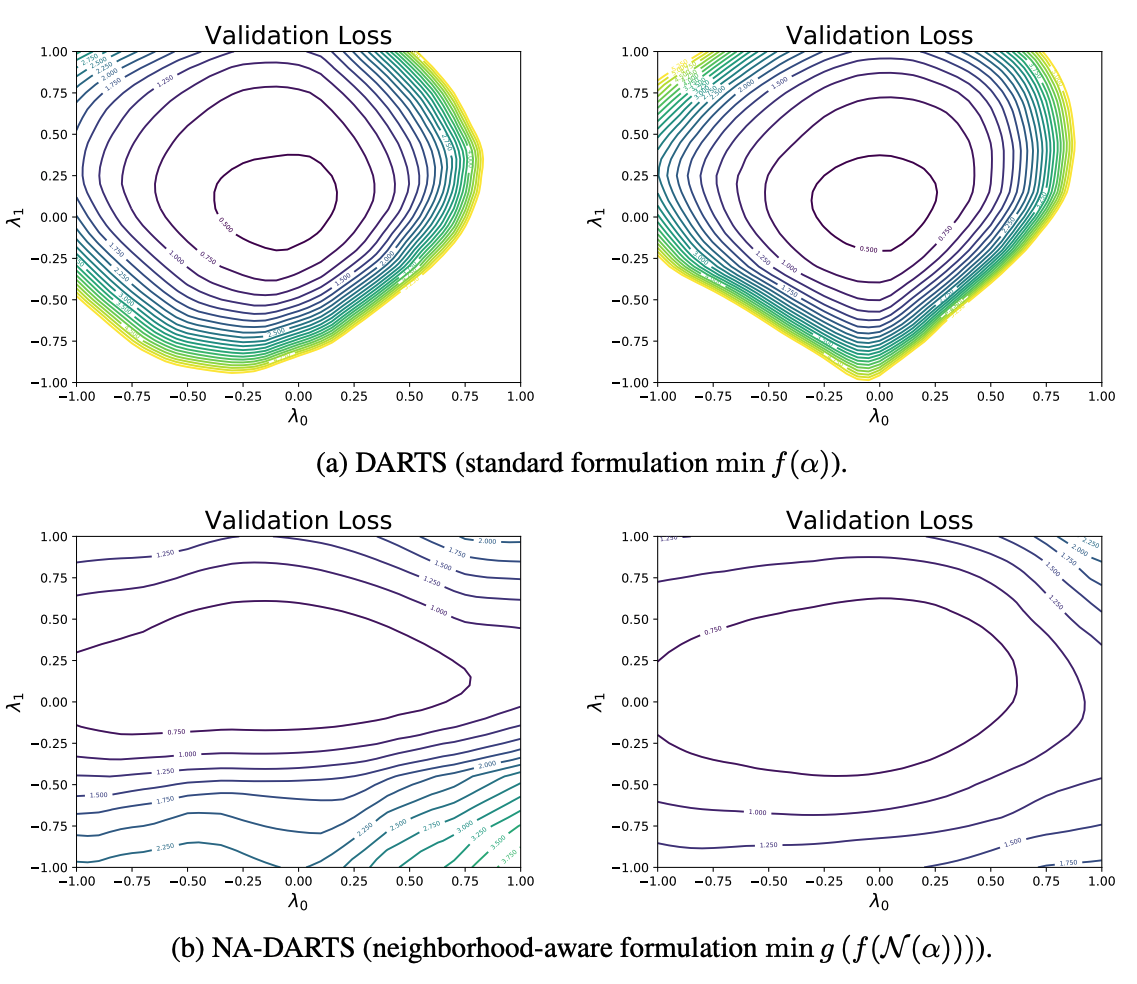

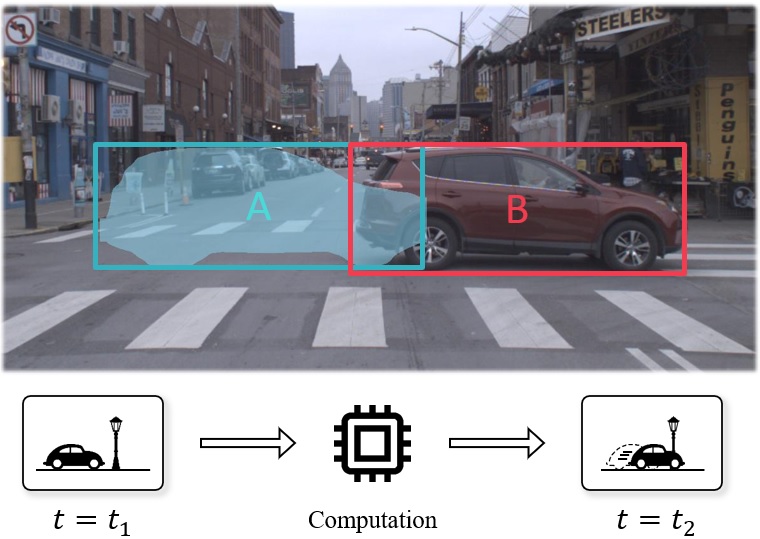

Mengtian Li, Yu-Xiong Wang and Deva Ramanan. Towards Streaming Perception. In ECCV, 2020.

Best Paper Honorable Mention

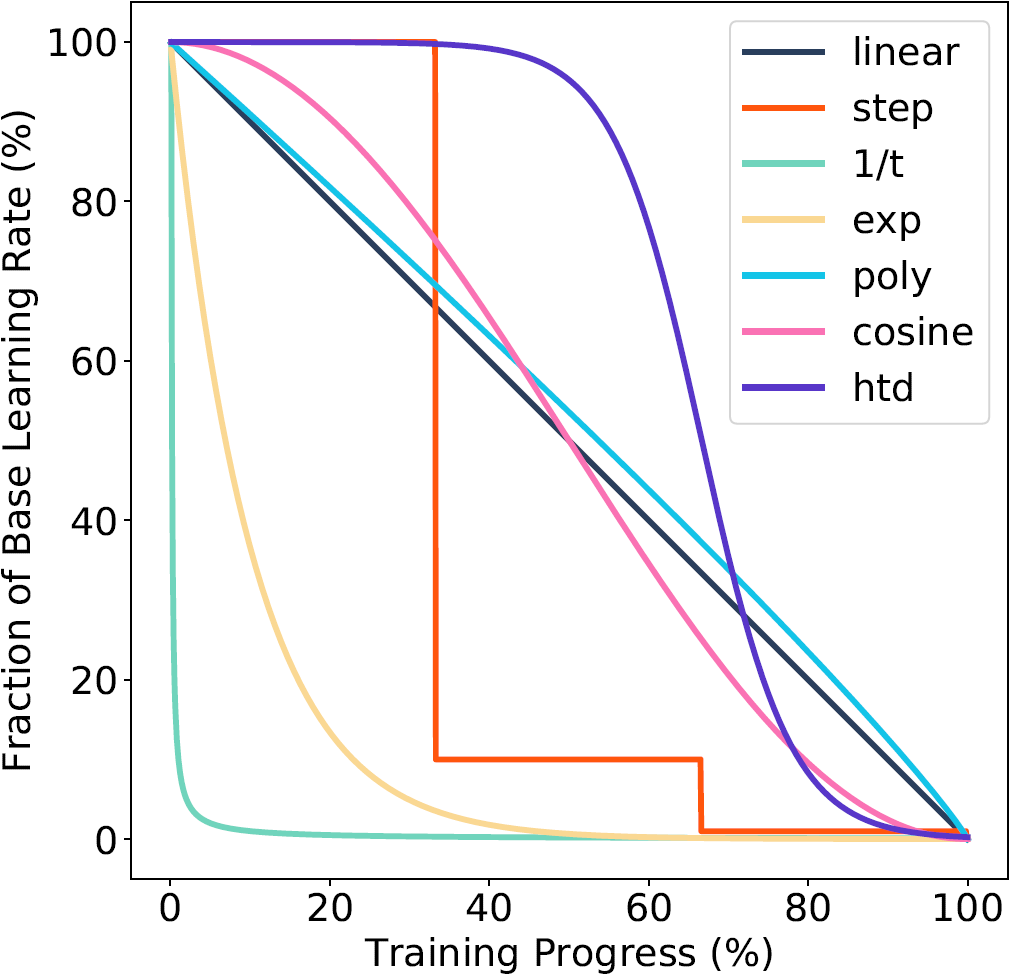

Mengtian Li, Ersin Yumer and Deva Ramanan. Budgeted Training: Rethinking Deep Neural Network Training Under Resource Constraints. In ICLR, 2020.

Mengtian Li, Zhe Lin, Radomír Měch, Ersin Yumer and Deva Ramanan. Photo-Sketching: Inferring Contour Drawings from Images. In WACV, 2019.

Misc

Confucius (孔子) once said: "if a craftsman wants to do good work, he must first sharpen his tools (工欲善其事，必先利其器)." I find that this idea also applies to research. Over the years, I have built various tools for my research, and I have open-sourced some of them on GitHub:

- HTML4Vision: a Python/HTML/JavaScript tool for visualizing datasets, comparing algorithms and making figures

- MTCMon: a web-based cluster resource monitor (widely adopted at CMU RI)

- DLGPUBench: a latency-focused GPU benchmark for deep learning